Redesigning AI-Powered Image Generation Tools to Improve Outputs

How to improve the results of AI image generators

Recently, OpenAI set the internet on fire with its latest version of ImageGen. The images it’s generating are remarkable, but from a user perspective the tool is pretty basic: you type in a prompt and get an image out.

To figure out how to improve this, you need to understand the three key problems:

The Weaknesses w/ Natural Language Image Gen

1) Chat has inherent weaknesses

Pure text-based interfaces make it hard for users to describe complex requirements.

Take this image for example. Say I want to adjust the shape or make the red elements more vivid. How do I ask it to do this? What does vivid even mean in this case?

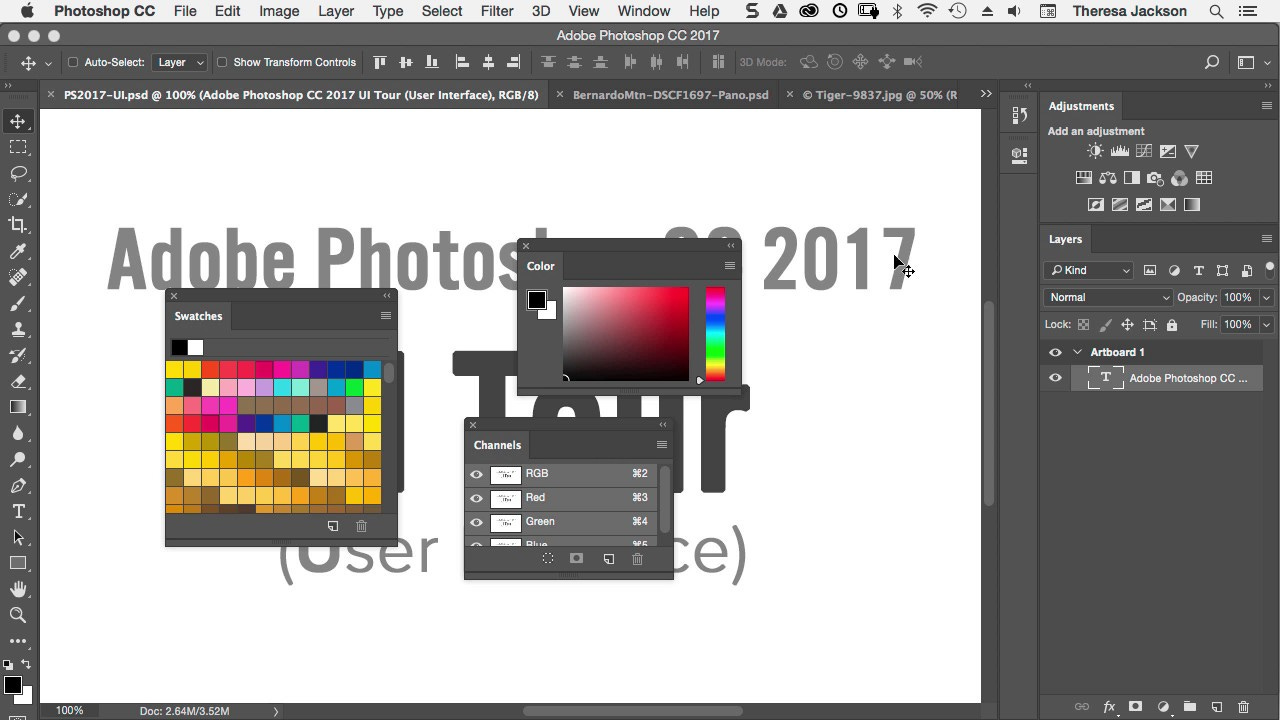

In the world of traditional photo editing, tools have been built to give us more granular control over specific elements.

You’re able to select a specific part and then use a colour picker to play with different colours until you get your desired result. This gives the user instant feedback instead of a 20-second wait for a whole new image to be generated. The colour grid also lets a user understand what sort of change will happen before they make it.

In this case, if I drag the colour wheel down, the red will become darker. If I drag it left, the element will become lighter and closer to white.
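The picker behaviour described above can be sketched in a few lines. This is a minimal illustration, assuming a square picker where the horizontal axis controls saturation and the vertical axis controls brightness; the function name `pick_colour` and its parameters are hypothetical and not taken from any particular editing tool.

```python
import colorsys


def pick_colour(hue: float, x: float, y: float) -> tuple[int, int, int]:
    """Map a pointer position on a square picker to an 8-bit RGB colour.

    hue: 0.0 to 1.0, where 0.0 is red.
    x:   0.0 (left edge) to 1.0 (right edge).
    y:   0.0 (top edge) to 1.0 (bottom edge).
    """
    # Clamp the pointer so dragging outside the square stays valid.
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)

    saturation = x       # assumption: dragging left washes the colour out
    value = 1.0 - y      # assumption: dragging down darkens the colour

    r, g, b = colorsys.hsv_to_rgb(hue, saturation, value)
    return (round(r * 255), round(g * 255), round(b * 255))


pure_red = pick_colour(hue=0.0, x=1.0, y=0.0)   # top-right corner
darker = pick_colour(hue=0.0, x=1.0, y=0.6)     # dragged down
lighter = pick_colour(hue=0.0, x=0.3, y=0.0)    # dragged left
```

Because the mapping is a cheap pure function, the preview can update on every pointer movement, which is where the instant feedback comes from.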

2) Massively complex workflows

The second problem is that the natural UI inspiration, or starting point, for AI image generators would be photo editing tools like Photoshop and Canva.

Incumbent tools, Photoshop in particular, have all the granularity but are designed for professionals, with thousands of buttons and sub-menus. The majority of people using AI image generators aren’t professional graphic designers, so they can’t be expected to climb such a steep learning curve.

3) Doesn’t give users ideas on how to improve prompts

The next major issue is prompting. It’s well known that, much like answers from LLMs, the quality of the image you get out depends on the prompt you feed in.

No tool helps with this right now.

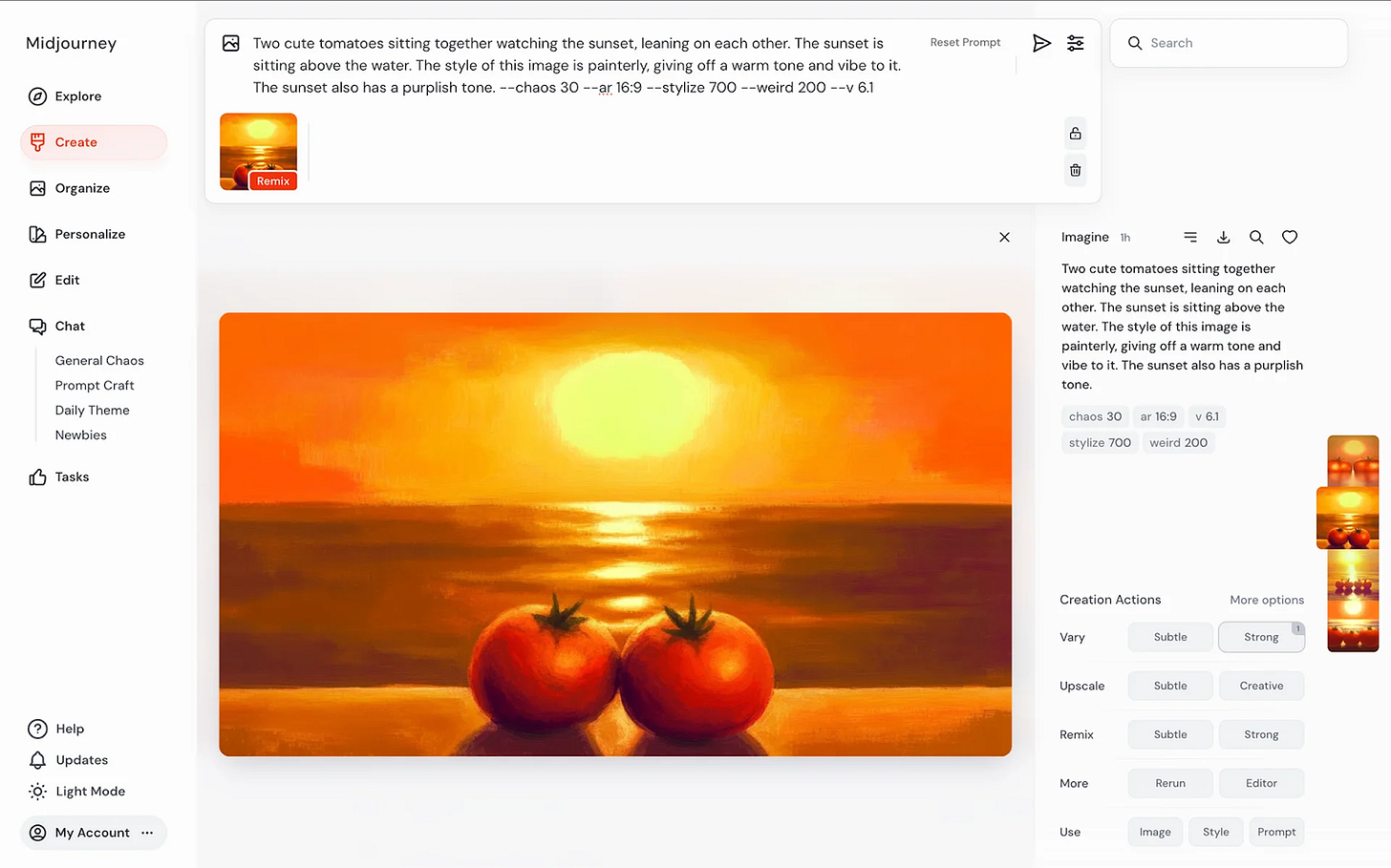

Midjourney goes the furthest by giving you some buttons to adjust things like aspect ratio, but they don’t help you improve your prompt or let you select a style or a colour palette for the image.

It still leaves the user with a blank canvas, expecting them to know the perfect concoction of words to create a masterpiece.

Why Focus on Helping Users Improve Prompts?

✅ Customer NPS: More granular controls give you more predictable outcomes and lead to a greater sense of control, thus increasing NPS.

✅ Lower Time to Value: Time to value with AI image generators is likely the moment a user gets a photo they like. Instead of leaving them to work out the perfect prompt by trial and error, you can suggest prompt improvements.

✅ Retention: Reliable outcomes and publish-ready pieces lead to greater retention.

So How Could We Help Improve Users’ Prompts?

1) Programmatic Questions

Taking inspiration from Deep Research, image generators could ask users questions to improve the quality of the prompt.

Take this image I generated for example. The prompt was simply “3 characters in beautiful oil painting of zelda style world, bright blue skies”

However, there are so many questions that could be asked to help you: What type of characters? What action are they doing? What’s the implied narrative of the picture?

To illustrate this, here are two images with the same base prompt, with only these additional prompt elements changed. They’re two completely different images.

Here’s what it may look like:

Give users buttons to easily add details to a prompt, whilst letting them retain the ability to edit their prompt in natural language if they have further inspiration.
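As a rough sketch of how those buttons could feed into the prompt, the snippet below models each clarifying question as a set of options and appends the chosen ones to the base prompt. The class names, the question keys, and the option texts are all hypothetical examples; only the base prompt and the three questions come from the article.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PromptQuestion:
    key: str            # identifies which button group an answer belongs to
    text: str           # the question shown to the user
    options: list[str]  # button labels (illustrative values only)


@dataclass
class PromptDraft:
    base: str
    answers: dict[str, str] = field(default_factory=dict)

    def choose(self, question: PromptQuestion, option: str) -> None:
        """Record a button click; a second click in the same group replaces the first."""
        if option not in question.options:
            raise ValueError(f"{option!r} is not an option for {question.key!r}")
        self.answers[question.key] = option

    def render(self) -> str:
        """Build the editable prompt text: base prompt plus the chosen additions."""
        return ", ".join([self.base, *self.answers.values()])


QUESTIONS = [
    PromptQuestion("characters", "What type of characters?",
                   ["three young adventurers", "a knight, a mage and an archer"]),
    PromptQuestion("action", "What action are they doing?",
                   ["resting by a campfire", "crossing a rope bridge"]),
    PromptQuestion("narrative", "What's the implied narrative of the picture?",
                   ["setting out on a journey", "returning home victorious"]),
]

draft = PromptDraft(
    base="3 characters in beautiful oil painting of zelda style world, bright blue skies"
)
draft.choose(QUESTIONS[0], "three young adventurers")
draft.choose(QUESTIONS[1], "crossing a rope bridge")
prompt_text = draft.render()
```

Rendering to a plain string keeps the result editable, so the user can still rewrite the prompt in natural language after clicking the buttons.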

2) Annotate specific parts like Figma comments

Or, if the user isn’t looking for tweaks to the prompt but wants to perfect an image, why not take inspiration from multiplayer features like comments in Figma?

Enable users to point to or highlight a specific section of an image and add comments for the image model. Give users granular control: point to a section and specify the desired colours, or remove items from the image.
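One way to picture the data behind such a comment is a region plus a note. The sketch below is an assumption about how it could be modelled, not a description of any existing tool: the `Annotation` class, the normalised coordinates, and the shape of the returned dictionaries are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    """A comment pinned to a rectangular region of the image.

    Coordinates are fractions of the image size (0.0 to 1.0), so the same
    annotation still lines up if the image is regenerated at another resolution.
    """
    x: float
    y: float
    width: float
    height: float
    comment: str

    def __post_init__(self) -> None:
        if min(self.x, self.y) < 0 or self.width <= 0 or self.height <= 0:
            raise ValueError("region must have a non-negative origin and positive size")
        if self.x + self.width > 1 or self.y + self.height > 1:
            raise ValueError("region must lie inside the image")

    def to_pixels(self, image_width: int, image_height: int) -> tuple[int, int, int, int]:
        """Return the region as (left, top, right, bottom) in pixels."""
        return (
            round(self.x * image_width),
            round(self.y * image_height),
            round((self.x + self.width) * image_width),
            round((self.y + self.height) * image_height),
        )


def build_edit_requests(annotations: list[Annotation],
                        image_width: int, image_height: int) -> list[dict]:
    """Pair each comment with its pixel region, ready to hand to an image model."""
    return [
        {"region": a.to_pixels(image_width, image_height), "instruction": a.comment}
        for a in annotations
    ]


notes = [
    Annotation(0.25, 0.5, 0.5, 0.25, "make the red elements more vivid"),
    Annotation(0.0, 0.0, 0.25, 0.25, "remove this item"),
]
requests = build_edit_requests(notes, image_width=1024, image_height=1024)
```

Keeping the instruction tied to a region means the model only needs to change the part the user pointed at, rather than regenerating the whole image from a revised prompt.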